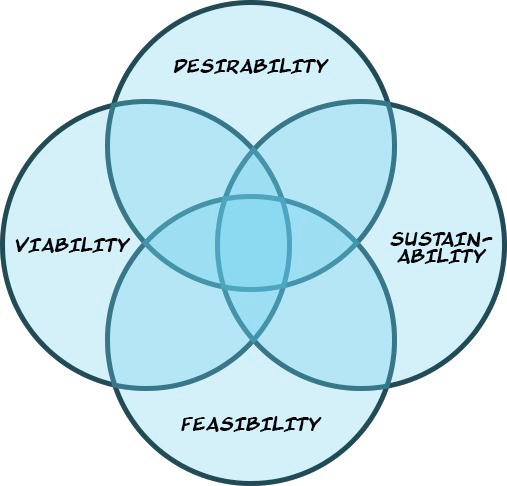

Desirability, Viability, Feasibility, Sustainability

Building a product as part of any kind of business is risky. Most new businesses fail, for a variety of reasons. Your job in the early stages is to mitigate those risks and navigate your company to a point where you're building something that people actually want, that can serve as the heart of a viable business, and which you can provide at scale with the team, resources, and time reasonably at your disposal.

One of the things I learned while investing at Matter was that your mindset matters more than anything. The founders who were most likely to succeed were able to identify their core assumptions, test those assumptions, be honest with themselves when they had it wrong, and act quickly to course-correct - based on imperfect information. Conversely, the founders most likely to fail were the ones who refused to face negative feedback and carried on with their vision. The former wanted to build a successful company; the latter wanted to pretend to be Steve Jobs.

De-risking a venture is all about continuously evaluating it through three distinct lenses:

Desirability: are you building something that meets a real user's needs? (Will the dog eat the dog food?)

Viability: if you are successful, can your venture succeed as a profitable, growing business? (Will the dog hunt?)

Feasibility: can you provide this service at scale with the team, time, and resources reasonably at your disposal? (Can we build the dog?)

Building a product through an iterative, human-centered process means looking through each of these lenses in turn. Is this product desirable, leaving aside viability and feasibility considerations? If not, what do you need to change to make it so? Then repeat for viability and feasibility.

This is at the heart of the design thinking process taught by Matter and others. It changed the way I think about building products forever.

I used to believe that if you just got the right smart people in a room, they could produce something great together. I wanted to build something and then put it out into the world. That's both a risky and egotistical strategy: it implies that you think you're so smart that you know what everybody wants. It's also often undertaken with a "scratch your own itch" mentality: build something to solve your own needs. As a result, the needs of wealthy San Franciscan millennials who went to Stanford are significantly overserved.

Market realities usually bring people back down to earth, but if you've spent a year developing a product, you've already burned a lot of time and resources. Conversely, if you're testing on day one, and day two, and day three, and so on, you don't need to wait to understand how people will react.

It's a great framework. There is, however, a missing lens.

I was pleased to see that Gartner has listed ethics and privacy as one of its ten key strategic technology trends for 2019. The world has changed, and market demands for technology products are very different to even three years ago. In the wake of countless data leaks and a compromised election, people are looking for more respectful software:

> Technology development done in a vacuum with little or no consideration for societal impacts is therefore itself the catalyst for the accelerated concern about digital ethics and privacy that Gartner is here identifying rising into strategic view.

The human-centered design thinking process is correct. But it needs a fourth lens that makes evaluating societal impact a core part of the process.

In addition to desirability, viability, and feasibility, I define the fourth lens as follows:

Sustainability: does this venture have a non-negative social and environmental impact, and does it respect the human rights of the user?

Of course, it could easily be argued that "non-negative" should be "positive" here - and for mission-driven ventures it probably should be. Unfortunately, in our current climate, non-negative is such a step up from the status quo that I'm inclined to think that asking every new business to have a meaningfully positive impact is unrealistic. It would be nice if this weren't the case. Requiring a positive impact also raises questions like: how can we quantify our impact? Those are good questions to ask, but not necessarily core to every venture.

If you're unsure how "human rights" are defined, the UN Universal Declaration of Human Rights is a good resource. It was adopted in 1948, after the Second World War, and covers everything from equality through privacy, freedom from discrimination, and the right to a fair trial. There's also the European Convention on Human Rights, which has a broader scope, while applying only within its European member states. The purpose of including human rights in this context is to force questions like: are we discriminating against certain groups of people? And: can our platform be used to further genocide?

The technology industry used to have the luxury of operating in a vacuum, without having to consider the broader societal context in which it operates. Its success means that its products are ingrained in every aspect of our lives. This brings new responsibilities, and the old days, when engineers and technologists could afford to be apolitical and apart from the world, are long gone. It's time that the ways in which we build products are brought up to date with our new reality.