Why the open social web matters now

The needs are real – and you have so much power.

A framework for newsroom build vs buy decisions.

How law enforcement and private parties can access your information without your knowledge, and what you can do about it.

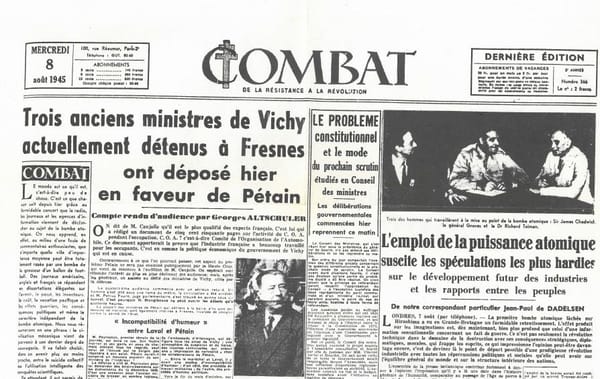

Preparing viable alternatives for broadcast censorship and a restricted internet.

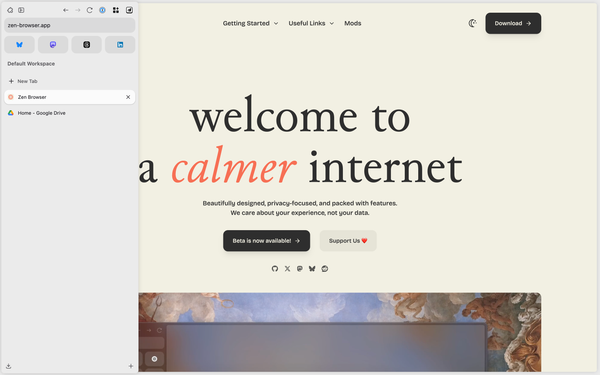

After Arc's pivot to AI left power users behind, I found the browser I actually wanted.

How self-tracking became self-incrimination

Ben Werdmuller explores the intersection of technology, democracy, and society. Always independently published, reader-supported, and free to read.

Many of our colleagues' communities are being directly targeted by an increasingly authoritarian, anti-immigrant regime. As managers and leaders, we have a duty of care. It starts with listening.

It turns out that "we're protecting democracy and our industry is dying, please pay us money" is not a great sales pitch.

This simple, repeatable framework for building a culture of open feedback has been a part of my toolkit for over a decade.

Casey Newton's annual updates are always great. This year, perhaps predictably, the biggest highlight for me is his ambitions around community. We need stronger community platforms to support newsrooms.

Has the internet led to more polarization, or is this more of a great unmasking, where people feel more comfortable sharing opinions they've always held?

Let's help build the world we want to see.

From self-hosted WordPress to managed solutions

Useful tips on creating an unlimited PTO policy. I used to think these were more accounting hacks than real benefits, but I've changed my mind over time.

How community-first thinking can help newsrooms survive AI

An open source project aimed at making medical research easier.