Known, the company I founded with Erin Jo Richey, is the third startup I've been deeply involved in. The first created Elgg, the open source social networking platform; I was CTO. The second is latakoo, which helps video professionals at organizations like NBC News send video quickly and in the correct format without needing to worry about compression or codecs. Again, I was CTO. In both cases, I was heavily involved in all aspects of the business, but my primary role was tending product, infrastructure and engineering.

At Known, I still write code and tend servers, but my role is to put myself out of that job. Despite having worked closely with two CEOs over ten years, and having spent a lot of time with CEOs of other companies, I've learned a lot while I've been doing this. I've also had conversations with developers that have revealed some incorrect but commonly-held assumptions.

Here are some notes I've made. Some of these I knew before; some of these I've learned on the job. But they've all come up in conversation, so I thought I'd make a list for anyone else who arrives at being a business founder via the engineering route. We're still finding our way - Known is not, yet, a unicorn - but here's what I have so far.

The less I code, the better my business does.

I could spend my time building software all day long, but that's only a fraction of the story. There's a lot more to building a great product than writing code: you're going to need to talk to people, constantly, to empathize with the problems they actually have. (More on this in a second.) Most importantly, there's a lot more to building a great business than building a great product. You know how startup founders constantly, infuriatingly, talk about "hustling"? The language might be pure machismo, but the sentiment isn't bullshit.

When I'm sitting and coding, I'm not talking to people, I'm not selling, I'm not gaining insight and there's a real danger my business's wheels are spinning without gaining any traction.

The biggest mistake I made on Known was sitting down and building for the first six months of our life, as we went through the Matter program. If I could do it again, I would spend almost none of that time behind my screen.

Don't scratch your own itch.

In the open source world, there's a "scratch your own itch" mentality: build software to solve your own problems. It's true that you can gain insight into a problem that way. But you're probably not going to want to pay yourself for your own product, so you'd better be solving problems for a lot of other people, too. That means you need to learn what people's itches are, and most importantly, get over the idea that you know better than them.

Many developers, because they know computers better than their users, think they know problems better than them, too. The thing is, as a developer, your problems are very different indeed. You use computers dramatically differently to most people; you work in a different context to most people. The only way to gain insight is to talk to lots and lots of people, constantly.

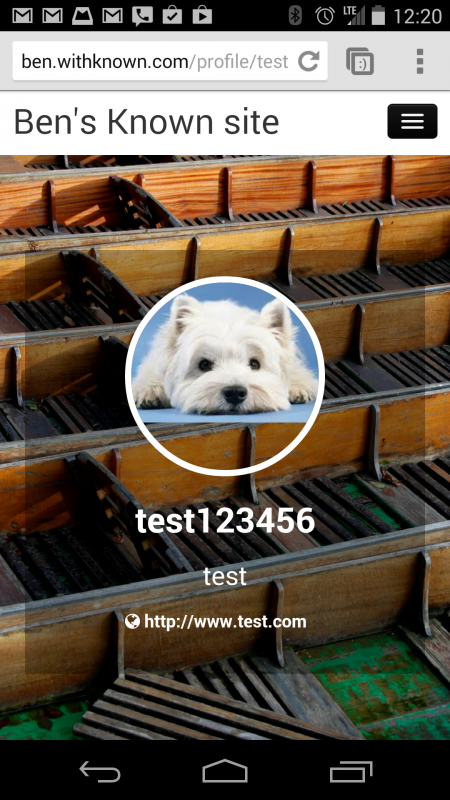

If you care passionately about a problem, the challenge is then to accept it when it's not shared with enough people to be a viable business. A concrete example: we learned the hard way that people, generally, won't pay for an indie web product for individuals, and took too long to explore other business avenues. (Partially because I care dearly about that problem and solution.) A platform for lots of people to share resources in a private group, with tight integration with intranets and learning management systems? We're learning that this is more valuable, and more in need. We're investigating much more, and I'm certain we'll continue to evolve.

Pick the right market; make the right product. Make money.

Learning to ask people for money is the single hardest thing I've had to do. I'm getting better at it, in part thanks to the storytelling techniques we picked up at Matter.

Product-market fit is key. It can't be overstated how important this is.

Product-market fit means being in a good market with a product that can satisfy that market.

The problem you pick is directly related to how effectively you can sell - not just because you need to be solving real pain for people, but because different problems have different values. A "good market" is one that can support a business well, both in terms of growth and finance. Satisfy that market, and, well, you're in business.

We sell Known Pro for $10 a month: hardly a bank-breaking amount. Nonetheless, we've had plenty of feedback that it's much too expensive. That's partially because the problem we were solving wasn't painful enough, and partially because consumers are used to getting their applications for free, with ads to support them.

So part of "hustling" is about picking a really important problem for a valuable market and solving it well. Another part is making sure the people who can benefit from it know about it. The Field of Dreams fallacy - "if you build it, they will come" - takes a lot of work to avoid. I have a recurring task in Asana that tells me to reach out to new potential customers every day, multiple times a day, but sales is really about relationships, which take time. Have conversations. Gain insight. See if you can solve their problems well. Social media is fun but virtually useless for this: you need to talk to people directly.

And here's something I've only latterly learned: point-blank ask people to pay. Be confident that what you're offering is valuable. If you've done your research, and built your product well, it is. (And if nobody says "yes", then it's time to go through that process again.)

Do things that don't scale in order to learn.

Startups need to do things that scale over time. It's better to design a refrigerator once and sell lots of them than to build bespoke refrigerators. But in the beginning, spending time solving individual problems, and holding people's hands, can give you insight that you can use to build those really scalable solutions.

Professional services like writing bespoke software are not a great way to run a startup - they're inherently unscalable - but they can be an interesting way to learn about which problems people find valuable. They're also a good way to bootstrap, in the early stages, as long as you don't become too dependent on them.

Be bloody-minded, but only about the right things.

Lots of people will tell you you're going to fail. You have to ignore those voices, while also knowing when you really are going to fail. That's why you keep talking to people, making prototypes, searching for that elusive product-market fit.

Choosing what to be bloody-minded about can be nuanced. For example:

Technology doesn't matter (except when it does).

Developers often fall down rabbit holes discussing the relative merits of operating systems and programming languages. Guess what: users don't care. Whether you use one framework or another isn't important to your bottom line - unless it will affect hiring or scalability later on. It's far better to use what you know.

But sometimes the technology you choose is integral to the problem. I care about the web, and figured that a responsive interface that works on any web browser would make us portable across platforms. This was flat-out wrong: we needed to build an app. We still need to build an app.

The entire Internet landscape has changed over the last six years, and we were building for an outdated version that doesn't really exist anymore. As technologists, we tend to fall in love with particular products or solutions. Customers don't really work that way, and we need to meet them where they're at.

Non-technical customers don't like options.

As a technical person, I like to customize my software. I want lots of options, and I always have: I remember changing my desktop fonts and colors as a teenager, or writing scripts for the chatrooms I used to join. So I wasn't prepared, when we started to have more conversations with real people, for how little they want that. Apple is right: things should just work. Options are complexity; software should just do the right things.

I think that's one reason why there's a movement towards smaller apps and services that just do one thing. You can focus on solving one thing well, without making it configurable within an inch of its life. If a user wants it to work a different way, they can choose a different app. That's totally not how I wish computers worked for people, but if there's one thing I've learned, it's this: what I want is irrelevant.

Run.

Run fast. Keep adjusting your direction. But run like the wind. You're never the only person in the race.

Investment isn't just not-evil: it's often crucial.

Bootstrapping is very hard for any business, but particularly tough if you're trying to launch a consumer product, which needs very wide exposure to gain traction and win in the marketplace. Unless you're independently wealthy or have an amazing network of people who are, you will need to find support. Money aside, the right investors become members of your team, helping you find success. Their insights and contacts will be invaluable.

But that means you have to have your story straight. Sarah Milstein puts it perfectly:

Entrepreneurs understandably get upset when VCs don’t grasp your business’s potential or tell you your idea is too complex. While those things happen, and they’re shitty, it’s not just that VCs are under-informed. It’s also that their LPs won’t support investments they don’t understand. Additionally, to keep attracting LP money, VCs need to put their money in startups that other investors will like down the road. VCs thus have little incentive to try to wrap their heads around your obscure idea, even if it’s possibly ground-breaking. VCs are money managers; they do not exist to throw dollars into almost any idea.

Keep it simple, stupid. Your ultra-cunning complicated mousetrap or niche technical concept may not be investable. You know you're doing something awesome, but the perception of your team, product, market and solution has to be that it has a strong chance of success. Yes, that rules some ventures out from large-scale investment and partially explains why the current Silicon Valley landscape looks like it does. So, find another way:

Be scrappy.

Don't be afraid of hacks or doing things "the wrong way". If you follow all the rules, or you're afraid of going off-road and trying something new, you'll fail. Beware of recipes (but definitely learn from other people's experiences).

Most of all: get over yourself, and get over why you fell in love with computers.

If empathy-building conversations and user testing tell you one thing, it's this: your assumptions are almost always wrong. So don't assume you have all the answers.

You probably got into computers well before most people. Those people have never known the computing environment you loved, and it's never coming back. You're building for them, because they're the customer: in many ways the hardest thing is to let go of what you love about computers, and completely embrace what other people need. A business is about serving customers. Serve them well by respecting their opinions and their needs. You are not the customer.

It's a hard lesson to learn, but the more I embrace it, the better I do.

Need a way to privately share and discuss resources with up to 200 people? Check out Known Pro or get in touch to learn about our enterprise services.
